The Rainbow Six Siege Breach and the Hidden Cost of Holiday Cyber Risk

Seasonal downtime is still prime time for attackers

For those who celebrate, it’s fair to say that the Christmas period is meant to be quiet: a time of rest, relaxation and spending time with loved ones. Offices empty, on-call rotas thin out, and for most people (our service desk excluded), attention shifts away from dashboards and alerts.

Now, perhaps in the most unsurprising news of the year, as a tech company, we have more than a few gamers in our ranks, which meant that the news over the weekend raised a few eyebrows.

Like many others, we watched as reports of a major breach affecting servers for the popular video game, Rainbow Six Siege, cut straight through that seasonal calm. Accounts were manipulated, in-game economies distorted, and Ubisoft (the makers) were forced into emergency server shutdowns and rollbacks to regain control.

Some may have read the news - and indeed this blog - and put it down to being “just a gaming issue”. Yet, for organisations running live services, it should be read as something else entirely: a textbook example of how cyber incidents rarely arrive at convenient times, and how the real cost of a breach goes far beyond what first appears on the surface.

This isn’t a hit piece on one game or its publisher; it’s a live and ongoing case study of what happens when systems are always on, attackers are always active, and defensive assumptions quietly weaken during periods of rest.

What Actually Happened

Video games have historically been a relatively safe space from cyber attacks, with perhaps the most well-known and damaging incident occurring in 2011, when the PlayStation Network was taken offline for 24 days.

At the time, around 77 million accounts were compromised, and UK regulators fined Sony £250,000, saying the breach "could have been prevented".

You may remember how Rockstar, the studio behind the much-anticipated Grand Theft Auto 6, was among several major companies targeted in a series of hacks in 2021 and 2022. Development footage of the upcoming game was leaked, and a teenager was eventually sentenced in a UK court over the attack.

Equally, Ubisoft itself was breached back in 2013, when customer data was stolen, but all had been calm in the almost 13 years since, until this weekend.

Over the weekend, attackers gained unauthorised access to backend systems supporting Rainbow Six Siege. The compromise allowed them to manipulate live account data at scale. Players reported sudden injections of billions of units of in-game currency, with a real-world value of around £9.5m, alongside unlocked items that should have been restricted and account status changes, including false bans.

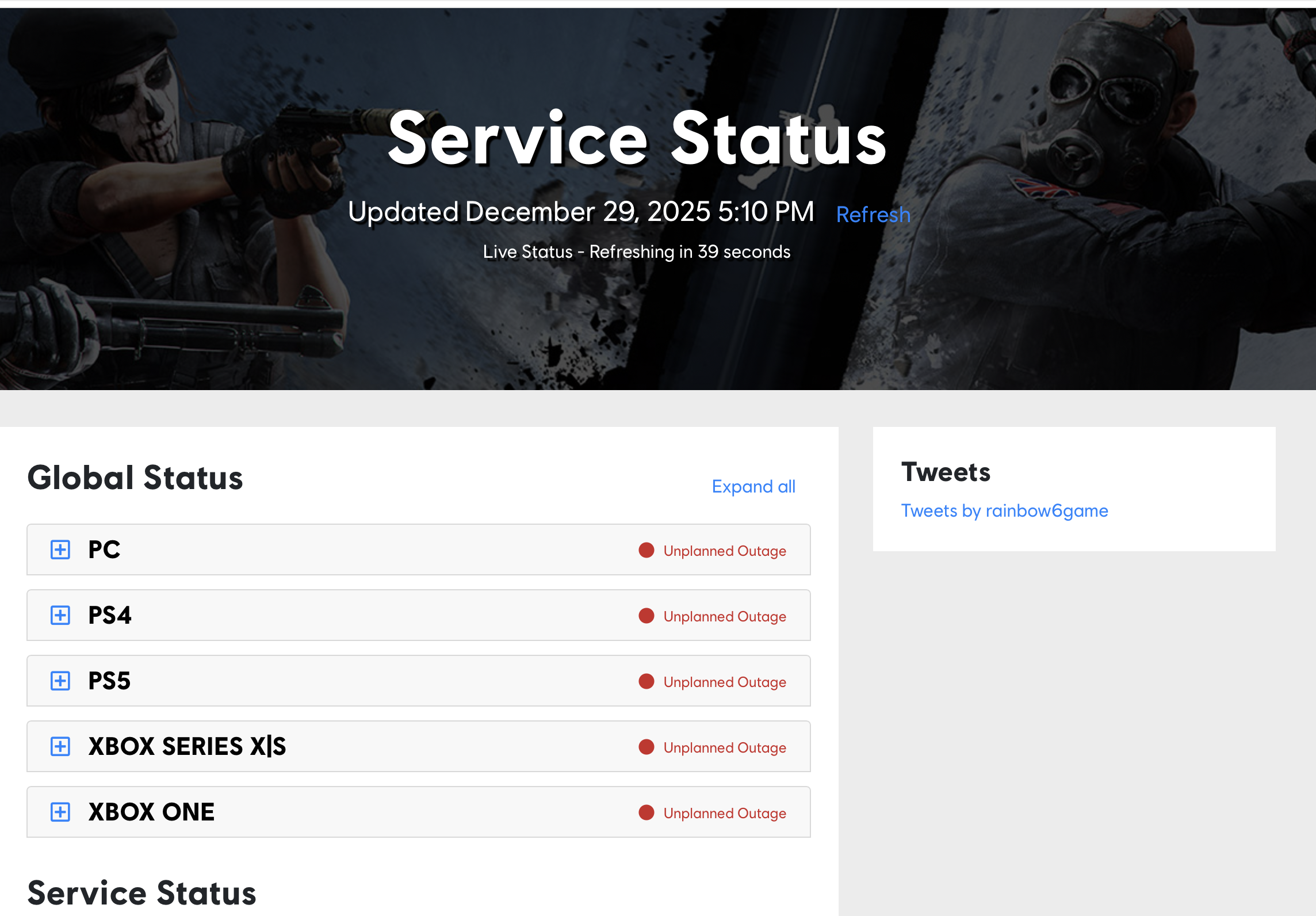

A look at what Ubisoft’s Service Status page shows, at the time of writing

It’s still very fresh, meaning we don’t know for certain who’s behind it yet. From whispers, as many as four separate hacker groups could have been involved in the attack, which has been linked to a new MongoDB vulnerability called ‘MongoBleed’. More than 87,000 other instances vulnerable to it could exist.

As-yet-unconfirmed reports on social media suggest that hackers had at least 48 hours of access to Ubisoft’s servers, from which they exfiltrated terabytes of internal source code, covering every release from the 1990s to projects currently being worked on.

Some media outlets are disputing this claim, but presumably if it’s true and a ransom isn’t paid, this data will find its way to the deep, dark corners of the web - or even be released en masse to the public.

The Immediate Aftermath

Although Ubisoft hasn’t yet issued much by way of a formal response to the attack, its operational response was immediate and disruptive.

Servers were taken offline globally, while live services, including matchmaking and the in-game marketplace, were suspended. Crucially, the company announced that any progress or transactions made after a specific point would be rolled back as part of the recovery process.

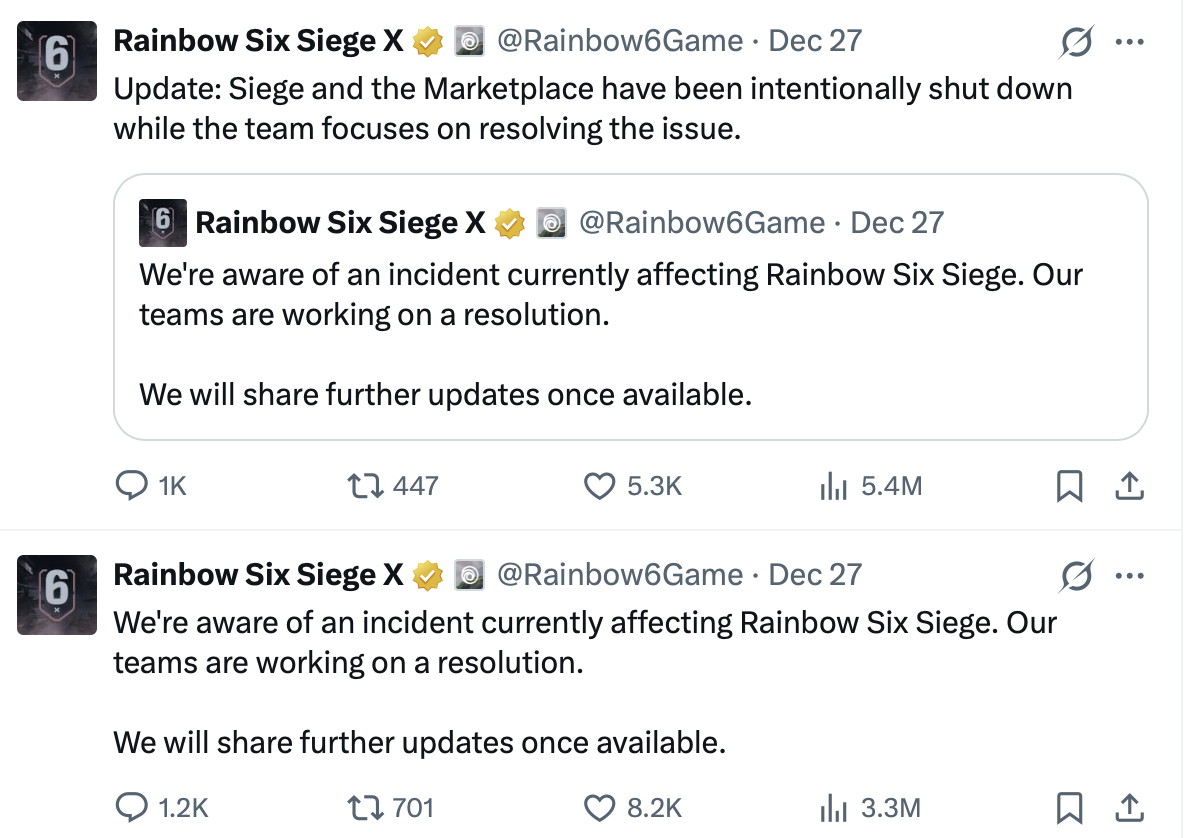

Their immediate updates once the incident was revealed (taken from their X account)

From a technical standpoint, what does that tell us? Well, it suggests several things. The breach was not limited to a single user or exploit chain. It touched systems central enough that Ubisoft could no longer trust the integrity of its live environment. Once trust in data integrity is lost, availability becomes secondary.

Or, in short, rollback wasn’t a choice.

It was the only viable option.

Why Rollback Is So Costly

From the outside, a rollback can look like a reset button. No harm, no foul - they restore a backup, in effect, and it’s business as usual... Right?

Internally, it’s anything but that simple.

Rolling back live systems means reconciling data across multiple layers. User accounts, transaction logs, entitlement systems, and anti-fraud controls all have to align again. Anything that cannot be reliably verified has to be reversed, even if that means undoing legitimate actions taken by genuine users.

In business systems, the equivalent would be reversing financial transactions, customer updates, or operational records because they can no longer be trusted. The operational disruption alone is significant, but the reputational impact can be worse.
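To make that reconciliation concrete, here is a minimal sketch of the logic in Python. It is purely illustrative and is not a description of Ubisoft’s actual recovery process: the `Transaction` record, the `verified` flag, the `roll_back` function, the account names and the cutoff time are all hypothetical. It assumes you hold a trusted snapshot of balances plus a log of everything that has happened since.

```python
from datetime import datetime, timedelta, timezone
from typing import NamedTuple


class Transaction(NamedTuple):
    account: str
    amount: int        # positive = credit, negative = debit
    at: datetime
    verified: bool     # hypothetical flag: could this entry be independently confirmed?


def roll_back(snapshot, log, cutoff):
    """Rebuild balances from a trusted snapshot.

    Entries at or before the cutoff are replayed only if they can be verified.
    Everything after the cutoff is reversed, whether it was legitimate or not.
    """
    balances = dict(snapshot)
    reversed_entries = []
    for tx in sorted(log, key=lambda t: t.at):
        if tx.at <= cutoff and tx.verified:
            balances[tx.account] = balances.get(tx.account, 0) + tx.amount
        else:
            reversed_entries.append(tx)
    return balances, reversed_entries


# Illustrative data only: an arbitrary cutoff and made-up accounts.
cutoff = datetime(2030, 1, 1, 12, 0, tzinfo=timezone.utc)
snapshot = {"alice": 100, "bob": 50}
log = [
    # Verified purchase before the cutoff: kept.
    Transaction("alice", 20, cutoff - timedelta(hours=1), True),
    # Unverifiable currency injection: reversed.
    Transaction("bob", 2_000_000_000, cutoff - timedelta(minutes=30), False),
    # Genuine spend after the cutoff: reversed anyway.
    Transaction("alice", -10, cutoff + timedelta(minutes=5), True),
]

balances, undone = roll_back(snapshot, log, cutoff)
print(balances)     # {'alice': 120, 'bob': 50}
print(len(undone))  # 2
```

Note the third entry: a genuine user did nothing wrong, and their action is undone regardless. That is the part of a rollback that costs goodwill.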

Consider the time of year: how many thousands, if not millions, of people will have gaming consoles, computers, or accessories that they were excited to use for games such as this, only to have the rug pulled from under them? What’s the cost of that real disappointment?

Similarly, a look at Ubisoft on Wikipedia shows that the ‘2023-Present’ era is titled ‘Financial Concerns and Reorganisation’, whilst, at the time of writing, their stock is down 50.44% YTD, so it’s fair to say that this is the last thing they needed to deal with.

Yet, the key lesson here is that incident response isn’t just about the perimeters you put in place to stop an attack. It’s equally about this: how do you go about restoring confidence in your systems, both internally and externally, after something that damaging happens?

Why Timing Matters More Than People Admit

This incident didn’t happen during a normal working week. Are we suggesting that the attack wouldn’t have succeeded had it been attempted two or three weeks earlier? Not at all. But the truth of the matter is that it landed during a holiday period when teams are smaller, response times are stretched, and escalation paths are slower by default.

Attackers understand this.

They don’t need insider knowledge to know when an organisation is most likely to be distracted - they’ve got calendars and know all too well how public holidays, weekends, and seasonal shutdowns have always been prime opportunities for exploitation.

The uncomfortable truth is that many organisations quietly accept reduced visibility during these periods. Monitoring may still be running, but fewer people are interpreting signals, validating alerts, and making judgment calls.

If you take anything away from this, it should be this: cyber risk doesn’t scale down just because the calendar says it should.

The Real Cost Is Not the Obvious One

Much of the public discussion focused on the apparent value of the in-game currency that was injected. On paper, that £9.5m number looks dramatic and grabs headlines. In reality, it barely scratches the surface of the true cost.

The real cost sits elsewhere.

There is the engineering time required to investigate and remediate the breach. Then, there’s the loss of service availability, player frustration and, above all else, erosion of trust. And all of that’s before the internal distraction from roadmap work and long-term improvement - or the headache of the ransom that could be headed their way, if reports are correct.

Zoom out further still, and the picture becomes clearer.

Cybercrime, as a whole, is widely understood to cost the global economy trillions annually. Individual incidents don’t need to hit nine-figure losses to be serious. They simply need to interrupt operations, damage trust, or expose systemic weakness.

And the even harsher reality is that only the big names make headlines, because their numbers sound bigger.

Does that mean you’re immune if you don’t have something like an ‘Assassin’s Creed’, ‘Rayman’ or ‘Just Dance’ in your repertoire? It doesn’t.

It simply means you won’t capture the same audience. The damage can, and will, be just as catastrophic, with the reputational damage and operational disruption outweighing the immediate financial impact of the breach itself.

Always-On Services Mean Always-On Risk

Gaming platforms sit at one extreme of the always-on model, but they are far from being the only ones. SaaS providers, cloud-hosted applications, customer portals, and managed services all share the same core exposure.

If users expect access at any time, attackers will test defences at any time, and this is something that frequently can, and does, keep our staff on their toes.

The Ubisoft incident reinforces a core principle that still catches organisations out: security controls are only as strong as their weakest operational moment. That includes people, unpatched servers, and gaps in cover at nights, weekends and holidays.

Resilience is not just about preventing breaches.

It’s about detecting them early, containing them quickly, and recovering without compounding the damage.

What This Means for Businesses Outside Gaming

It would be easy to dismiss this as a niche issue. That would be a mistake.

Fundamentally, the mechanics are the same whether you are protecting player inventories or customer data. Compromised credentials, exposed interfaces, over-privileged systems, or unmonitored access paths all lead to the same outcome when exploited.

Businesses running online services should take several points away from this:

First, monitoring must be round the clock and actionable. Alerts that no one is actively watching aren’t controls. They’re noise.

Second, incident response plans must assume inconvenient timing. If your response depends on specific individuals being available, it’s fragile by design.

Third, rollback and recovery strategies should be tested in advance. Discovering that restoration is slow or incomplete during a live incident is too late.

Finally, communication matters. Clear, honest updates help preserve trust even when service availability is impacted.
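The second point, in particular, is easy to check before a holiday period rather than during one. As a rough sketch, assuming your on-call rota can be exported as a simple mapping of dates to named responders, a few lines of Python will flag the days where cover depends on a single person, or on nobody at all. The `coverage_gaps` function, the names, the dates and the minimum of two responders are all illustrative assumptions, not a standard.

```python
from datetime import date, timedelta


def coverage_gaps(rota, start, end, minimum=2):
    """Return (day, responders) for each day in [start, end] on which
    fewer than `minimum` distinct responders are scheduled."""
    gaps = []
    day = start
    while day <= end:
        responders = set(rota.get(day, ()))
        if len(responders) < minimum:
            gaps.append((day, sorted(responders)))
        day += timedelta(days=1)
    return gaps


# Hypothetical holiday rota: names and dates are made up for illustration.
rota = {
    date(2030, 12, 24): ["priya", "tom"],
    date(2030, 12, 25): ["priya"],        # single point of failure
    date(2030, 12, 26): [],               # nobody on call
    date(2030, 12, 27): ["sam", "tom"],
}

gaps = coverage_gaps(rota, date(2030, 12, 24), date(2030, 12, 27))
for day, responders in gaps:
    print(day.isoformat(), responders or "nobody")
```

Run against a real rota export, a check like this turns “we think we’re covered” into a list of specific dates to fix.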

A Final Thought

The Rainbow Six Siege breach is a reminder that cyber incidents rarely announce themselves politely. They arrive when guards are down, attention is elsewhere, and assumptions are unchallenged.

Christmas is a time of rest for people. It shouldn’t be a time for dealing with attackers.

For organisations, the lesson is simple but uncomfortable. If your systems are always available, your security posture has to be always ready. Preparation, resilience, and response planning are not seasonal activities; they’re essential year-round.

If you don’t know where your gaps lie, or how sturdy your defences are, you can be sure of one thing: when an incident arrives at your door, and it will, the difference between disruption and disaster will have been decided long before it happens.

So why not reach out to us today to learn how we can protect you? After all, peace of mind is for life, not just for Christmas.